Performance tuning SELECT statements can be a time-consuming task which, in my opinion, follows the Pareto principle: 20% of the effort is likely to give you an 80% performance improvement. To get another 20% performance improvement you probably need to spend 80% of the time. Unless you work on the planet Venus, where each day is equal to 243 Earth days, delivery deadlines are likely to mean you will not have enough time to put into tuning your SQL queries.

After years of writing and running SQL statements I began to develop a mental check-list of things I look at when trying to improve query performance. These are the things I check before moving on to query plans and reading the sometimes complicated documentation of the database I am working on. My check-list is by no means comprehensive or scientific, more like a back-of-the-envelope calculation, but I can say that most of the time I do get performance improvements by following these simple steps. The check-list follows.

Check indexes

There should be indexes on all fields used in the WHERE and JOIN portions of the SQL statement. Take the 3-Minute SQL performance test. Regardless of your score, be sure to read through the answers, as they are informative.
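A quick way to see what an index changes is to ask the database for its plan before and after creating one. The following is a minimal sketch using Python's built-in sqlite3 module; the `sales` table, its columns and the index name are assumptions made up for illustration, and other databases word their plans differently.

```python
import sqlite3

# Hypothetical table for illustration; names are assumptions, not from a real schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO sales (customer_id, amount) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

def plan(sql):
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)

query = "SELECT amount FROM sales WHERE customer_id = 42"

plan_before = plan(query)  # no index on customer_id yet: the table is scanned
conn.execute("CREATE INDEX idx_sales_customer ON sales (customer_id)")
plan_after = plan(query)   # the WHERE field is now indexed: the index is searched

print(plan_before)
print(plan_after)
```

The exact wording of the plan varies between SQLite versions, but the first plan should mention a scan and the second should name the new index.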

Limit size of your working data set

Examine the tables used in the SELECT statement to see if you can apply filters in the WHERE clause of your statement. A classic example is a query that worked well when there were only a few thousand rows in the table and slowed down as the application grew. The solution may be as simple as restricting the query to the current month's data.

When you have queries that have sub-selects, look to apply filtering to the inner statement of the sub-selects as opposed to the outer statements.
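As a rough illustration of filtering the inner statement, the sketch below runs the same report twice with Python's sqlite3 module: once with the month filter on the outer statement and once with it inside the sub-select. The `sales` table and its data are invented for the example. Some optimisers will push the outer filter down for you, but writing it in the inner statement does not rely on that.

```python
import sqlite3

# Hypothetical data for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (customer_id INTEGER, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        (1, "2024-05-15", 10.0),
        (1, "2024-06-02", 20.0),
        (2, "2024-06-10", 5.0),
        (2, "2024-06-20", 7.0),
        (3, "2024-04-01", 99.0),
    ],
)

# Filter applied late: the sub-select aggregates every month, then most rows are discarded.
outer_filtered = """
    SELECT customer_id, total
    FROM (
        SELECT customer_id, substr(sale_date, 1, 7) AS sale_month, SUM(amount) AS total
        FROM sales
        GROUP BY customer_id, substr(sale_date, 1, 7)
    )
    WHERE sale_month = '2024-06'
    ORDER BY customer_id
"""

# Filter applied early: the sub-select only ever touches June's rows.
inner_filtered = """
    SELECT customer_id, total
    FROM (
        SELECT customer_id, SUM(amount) AS total
        FROM sales
        WHERE sale_date >= '2024-06-01' AND sale_date < '2024-07-01'
        GROUP BY customer_id
    )
    ORDER BY customer_id
"""

rows_outer = conn.execute(outer_filtered).fetchall()
rows_inner = conn.execute(inner_filtered).fetchall()
print(rows_outer == rows_inner)  # both forms return the same totals
```

The range condition on `sale_date` is used in the second form so that an index on that column, if one exists, can still be used.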

Only select fields you need

Extra fields often increase the grain of the data returned and thus result in more (detailed) data being returned to the SQL client. Additionally:

- When using reporting and analytical applications, slow report performance is sometimes caused by the reporting tool having to do the aggregation because the data is received in detailed form.

- Occasionally the query may run quickly enough but your problem could be a network-related issue, as large amounts of detailed data are sent to the reporting server across the network.

- When using a column-oriented DBMS only the columns you have selected will be read from disk, so the fewer columns you include in your query, the less I/O overhead.
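To make the point about grain concrete, here is a small sketch with Python's sqlite3 module. The `sales` table and its contents are made up for the example. Adding one extra field to an aggregated query triples the number of rows sent back to the client.

```python
import sqlite3

# Hypothetical data: 2 regions x 3 products x 4 sales each.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        (region, product, 10.0)
        for region in ("North", "South")
        for product in ("A", "B", "C")
        for _ in range(4)
    ],
)

# Only the field the report needs: one row per region.
by_region = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()

# One extra field makes the grain finer: one row per region and product.
by_region_product = conn.execute(
    "SELECT region, product, SUM(amount) FROM sales "
    "GROUP BY region, product ORDER BY region, product"
).fetchall()

print(len(by_region), len(by_region_product))
```

If the report only shows totals per region, the second query leaves the reporting tool to re-aggregate rows the database could have summarised itself.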

Remove unnecessary tables

The reasons for removing unnecessary tables are the same as the reasons for removing fields not needed in the select statement.

Writing SQL statements is a process that usually takes a number of iterations as you write and test them. During development it is possible to add tables to the query that have no impact on the data returned by the SQL code. Once the SQL is correct, I find many people do not review their script and remove tables that have no impact or use in the final data returned. By removing the JOINs to these unnecessary tables you reduce the amount of processing the database has to do. Sometimes, much like removing columns, you may find you also reduce the data being brought back by the database.
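A sketch of what such a leftover join can look like, again using Python's sqlite3 module with an invented `customer` and `sales` schema. The join to `customer` contributes no fields and no filtering, so dropping it returns the same rows. This only holds when the join can neither remove nor duplicate rows, which is assumed here because every sale has exactly one matching customer.

```python
import sqlite3

# Hypothetical schema: every sale references exactly one existing customer.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE sales (
        sale_id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer (customer_id),
        amount REAL
    );
    INSERT INTO customer VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO sales VALUES (1, 1, 10.0), (2, 1, 15.0), (3, 2, 30.0);
""")

# Left over from development: customer is joined but none of its fields are used.
with_join = conn.execute("""
    SELECT s.sale_id, s.amount
    FROM sales s
    JOIN customer c ON c.customer_id = s.customer_id
    ORDER BY s.sale_id
""").fetchall()

# Same result without the join, and less work for the database.
without_join = conn.execute(
    "SELECT sale_id, amount FROM sales ORDER BY sale_id"
).fetchall()

print(with_join == without_join)
```

Comparing the two result sets like this before deleting a join is a cheap way to confirm the table really was unnecessary.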

Remove OUTER JOINS

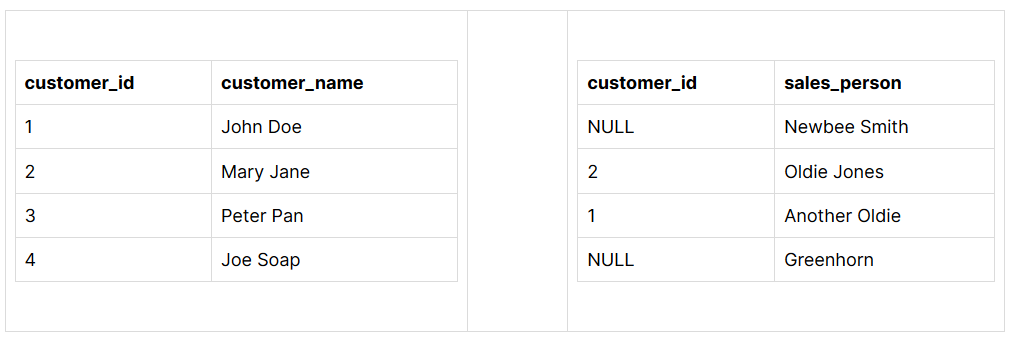

This can be easier said than done and depends on how much influence you have in changing table content. One solution is to remove OUTER JOINs by placing placeholder rows in both tables. Say you have the following tables with an OUTER JOIN defined to ensure all data is returned:

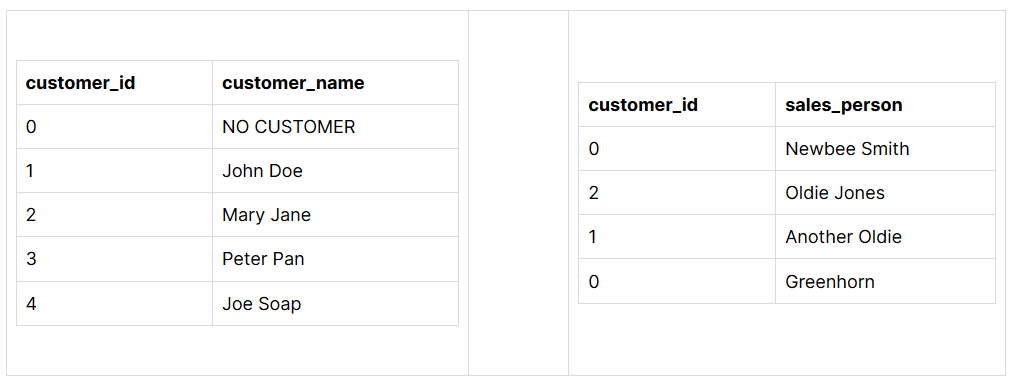

The solution is to add a placeholder row in the customer table and update all NULL values in the sales table to the placeholder key.

Not only have you removed the need for an OUTER JOIN, you have also standardised how sales people with no customers are represented. Other developers will not have to write statements such as ISNULL(customer_id, 'No customer yet').
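The placeholder approach can be sketched end to end with Python's sqlite3 module. The table layouts, the names and the placeholder key of 0 are assumptions chosen for illustration and may differ from the tables pictured above.

```python
import sqlite3

# Hypothetical tables: Cid is a sales person with no customer yet.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
    CREATE TABLE sales (sales_person TEXT, customer_id INTEGER);
    INSERT INTO customer VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO sales VALUES ('Ann', 1), ('Bob', 2), ('Cid', NULL);
""")

# Before: an OUTER JOIN is needed so that Cid is not dropped from the result.
before = conn.execute("""
    SELECT s.sales_person, c.customer_name
    FROM sales s
    LEFT OUTER JOIN customer c ON c.customer_id = s.customer_id
    ORDER BY s.sales_person
""").fetchall()

# Add the placeholder customer and point the NULL keys at it.
conn.executescript("""
    INSERT INTO customer VALUES (0, 'No customer yet');
    UPDATE sales SET customer_id = 0 WHERE customer_id IS NULL;
""")

# After: a plain JOIN returns every sales person.
after = conn.execute("""
    SELECT s.sales_person, c.customer_name
    FROM sales s
    JOIN customer c ON c.customer_id = s.customer_id
    ORDER BY s.sales_person
""").fetchall()

print(before)
print(after)
```

In the first result Cid comes back with a NULL customer name; in the second the placeholder text appears without any ISNULL handling in the query.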

Remove calculated fields in JOIN and WHERE clauses

This is another step that may at times be easier said than done, depending on your permissions to make changes to the schema. It can be done by adding a field to the table that stores the calculated value used in the join. Given the following SQL statement:

    SELECT
        *
    FROM
        sales a
        JOIN budget b ON ((year(a.sale_date) * 100) + month(a.sale_date)) = b.budget_year_month

Performance can be improved by adding a column with the year and month in the sales table. The updated SQL statement would be as follows:

    SELECT
        *
    FROM
        sales a
        JOIN budget b ON a.sale_year_month = b.budget_year_month
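The whole change, from calculated join to stored column, can be tried out with Python's sqlite3 module. This is a sketch under a few assumptions: the data is invented, the index name is hypothetical, and because SQLite has no year() or month() functions, strftime() stands in for them.

```python
import sqlite3

# Hypothetical data for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL);
    CREATE TABLE budget (budget_year_month INTEGER PRIMARY KEY, budget_amount REAL);
    INSERT INTO sales VALUES
        (1, '2024-05-15', 10.0), (2, '2024-06-02', 20.0), (3, '2024-06-20', 5.0);
    INSERT INTO budget VALUES (202405, 100.0), (202406, 200.0);
""")

# SQLite spelling of (year(sale_date) * 100) + month(sale_date).
YEAR_MONTH = (
    "CAST(strftime('%Y', sale_date) AS INTEGER) * 100"
    " + CAST(strftime('%m', sale_date) AS INTEGER)"
)

# Original form: the calculation runs for every row considered by the join.
rows_calculated = conn.execute(f"""
    SELECT a.sale_id, b.budget_amount
    FROM sales a
    JOIN budget b ON ({YEAR_MONTH}) = b.budget_year_month
    ORDER BY a.sale_id
""").fetchall()

# Store the calculated value once, and index it so the join can use it.
conn.execute("ALTER TABLE sales ADD COLUMN sale_year_month INTEGER")
conn.execute(f"UPDATE sales SET sale_year_month = {YEAR_MONTH}")
conn.execute("CREATE INDEX idx_sales_year_month ON sales (sale_year_month)")

# Updated form: a plain column-to-column join.
rows_stored = conn.execute("""
    SELECT a.sale_id, b.budget_amount
    FROM sales a
    JOIN budget b ON a.sale_year_month = b.budget_year_month
    ORDER BY a.sale_id
""").fetchall()

print(rows_calculated == rows_stored)
```

In a real system the new column also has to be filled in for every new or changed row, for example by the loading process or a trigger, otherwise the two joins will drift apart.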

Conclusion

The recommendations boil down to a few short pointers:

- check for indexes

- work with the smallest data set required

- remove unnecessary fields and tables and

- remove calculations in your JOIN and WHERE clauses.

If all these recommendations fail to improve your SQL query performance, my last suggestion is that you move to Venus. All you will need is a single day to tune your SQL.